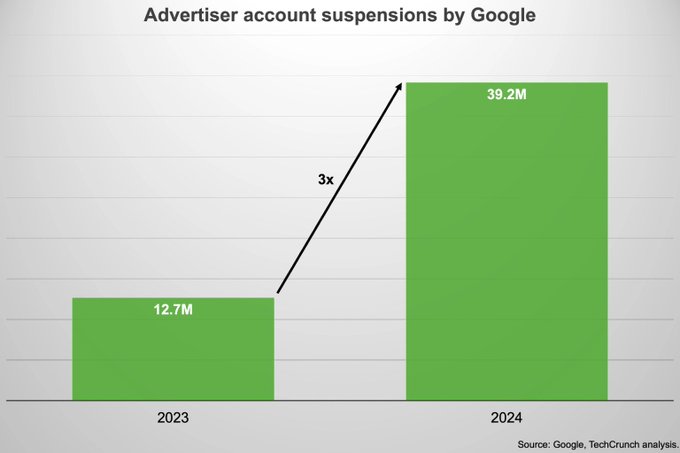

NAIROBI, Kenya — If you were planning to scam your way through Google Ads in 2024, artificial intelligence had other plans. According to Google’s latest Ads Safety Report, the tech giant suspended a jaw-dropping 39.2 million advertiser accounts last year — most before a single ad was ever shown.

And no, this wasn’t some manual review marathon. Google deployed its own army of large language models (LLMs) to sniff out fraudsters faster and smarter than ever before.

LLMs: Google’s AI Sheriff Is in Town

While 2023 saw LLMs charming us with poetry and meal plans, 2024 turned them into enforcers.

Google introduced more than 50 enhancements to its AI models to better detect malicious advertising behavior.

Think smarter algorithms that can now flag sketchy payment details, business impersonation attempts, and shady personalized ad tactics.

The results? Nothing short of staggering: over 5.1 billion bad ads blocked globally. That’s billion — with a “B.” It’s a sweeping effort that highlights just how much damage fraudulent ads can cause if left unchecked.

Impersonators, Deepfakes, and the AI-Scam Evolution

From fake business listings to celebrity deepfakes, the new wave of fraud isn’t your average spam. Google says one disturbing trend on the rise is public figure impersonation — using AI-generated images or voice clones of celebrities to give scams a shiny, trustworthy face.

One unsettling example? A deepfaked Oprah Winfrey pushing dubious self-help courses.

Locally, Kenya hasn’t been spared either, with AI-crafted videos of news anchors peddling fake land deals making the rounds on social media.

“We’re seeing an uptick in more sophisticated scams,” Google said, noting that over 700,000 advertiser accounts were permanently banned for such offenses.


Google’s AI Playbook: Protecting the Ad Network at Scale

While flashy numbers make headlines, this crackdown underscores something more important: trust.

By suspending suspect accounts before their ads ever go live, Google is tightening the noose around digital scam artists and reinforcing its commitment to safer ad spaces.

The majority of suspensions stemmed from abuse of the ad network, trademark violations, and manipulative personalization tactics.

And thanks to the scalability of AI, enforcement has become more precise without sacrificing reach. It’s not just about blocking what’s bad — it’s about preserving what’s good.

Conclusion: AI, Ads, and the New Rules of Engagement

As deepfakes and AI-generated scams grow more sophisticated, Google’s own AI is proving it can keep up — and strike back.

With more than 39 million advertiser accounts suspended and billions of bad ads wiped off the internet, the message is clear: if you plan to game the ad system, don’t be surprised when AI beats you at your own game.

And if you’re a brand trying to do it right? Rest easy — the AI gatekeepers are working overtime.